Experience & Expertise to Fit Your Actuarial Needs

Actuarial work is more than numbers. It’s about understanding our clients’ industries, their particular needs and challenges, and helping address them with actuarial and business experience.

Our consultants are more than actuaries. They bring a wealth of experience gained both inside and outside the firm. Within the firm, they support all sides of the industry, including insurers, state regulators, self-insured entities, and more. Outside it, many are or have been chief actuaries, underwriters, data scientists, and risk managers.

Our actuarial and business approach covers virtually all lines of property, casualty, health, life, disability, and long-term care exposures.

Actuarial Solutions

Learn more about what our experts can do for you.

Property & Casualty Actuarial Solutions

We specialize in property and casualty actuarial pricing and reserving, product development and compliance, mergers and acquisitions, risk management, and broader actuarial consulting.

Life & Health Actuarial Solutions

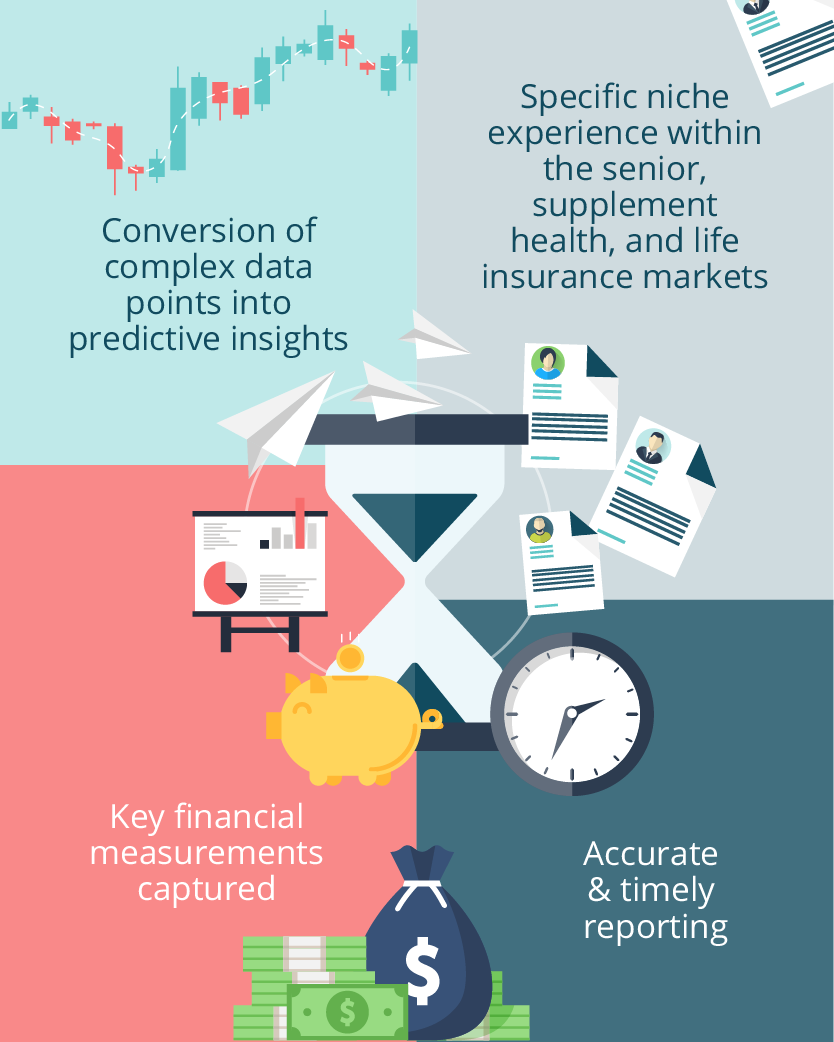

We specialize in all areas of actuarial consulting, including pricing and product development, valuation and financial reporting, actuarial modeling, experience analysis, rate increases, mergers and acquisitions, and more. Specific product line support includes senior market, supplemental health, and life insurance.

Product Development & Compliance

Our team of experienced product development, regulatory compliance, and actuarial specialists is ready to handle your product needs. We can assist in assessing the profitability of your current products, expanding product offerings into new states, developing new products, or coordinating annual rate filings for all lines of business, including personal, commercial, life, health, and workers’ compensation lines.

How We Are Unique

Supporting our clients is more than just finding the right number. We use all of our available faculties to analyze, discern, and then communicate our findings so that our clients can address concerns and achieve goals.

- Right Tools and Right Understanding. We pair a wide range of tools and technology with the right experience to provide solutions specific to your industry, objectives, and intended audiences.

- Understandable and Usable. Clients aren’t looking for esoteric, theoretical answers but for solutions that are understandable and actionable. It is our job to make sure that our clients understand and can act upon the support we provide them.

- Persistent. The right solution for a client is one that is complete. We will not stop until we have identified a robust solution for our client.